Agentic AI: Trust Calibration Framework

Designing an auditable 'Triaged Interaction Model' to solve the Agency-Control Paradox in autonomous networks.

Problem

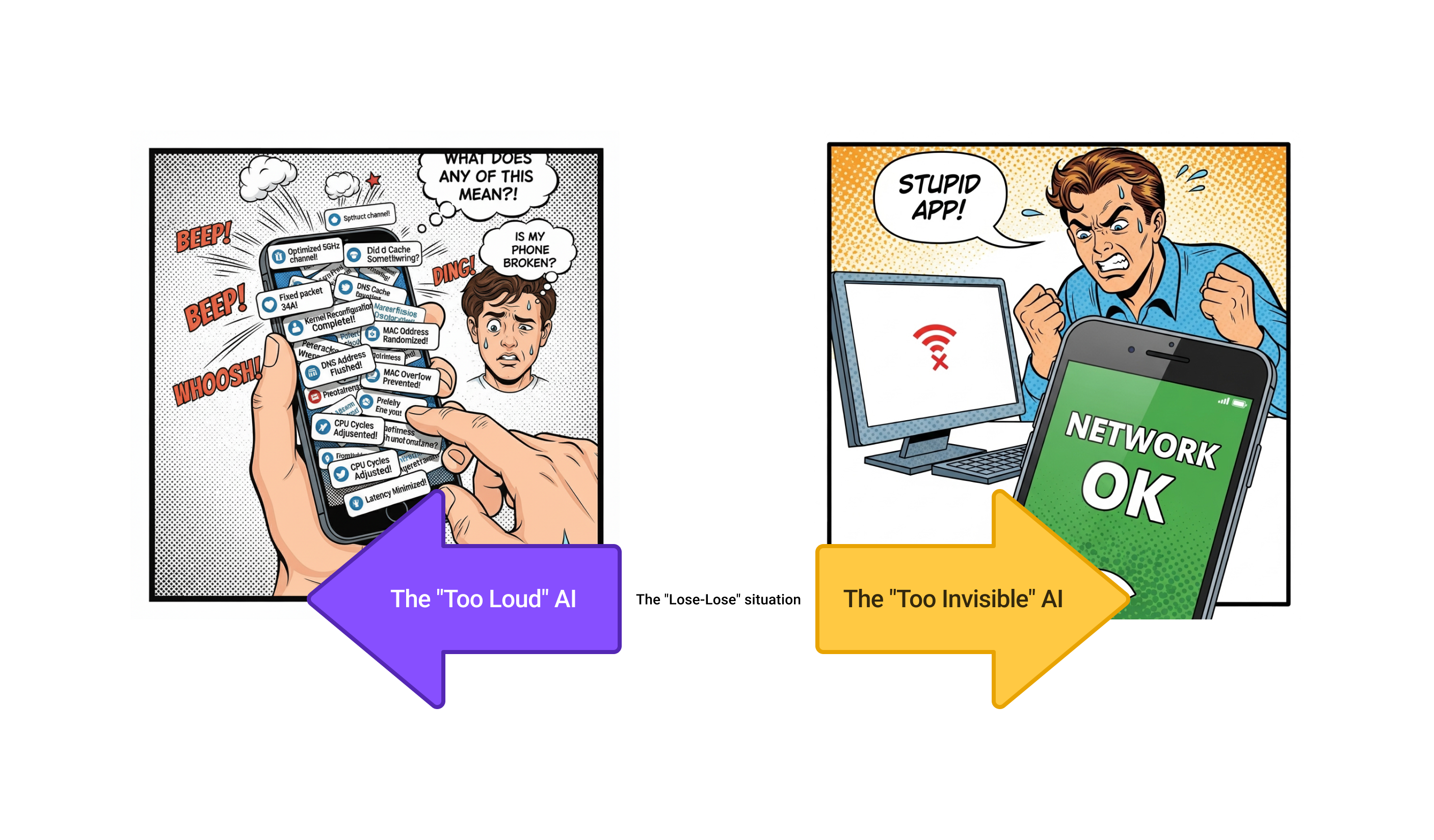

The "Agency-Control Paradox"

In a self-healing autonomous network, I discovered a core paradox:

• Too Invisible (Black Box): If the AI acts silently, users perceive no value and feel a loss of control ("Is it working?").

• Too Loud (Cognitive Overload): If the AI reports every action, it becomes spam, and users disable it.

My Role

As the Sole Product Designer, I defined the Human-AI Interaction Model for the ecosystem, moving beyond simple notifications to a system of Trust Calibration.

Solution

I designed a Triaged Interaction Model.

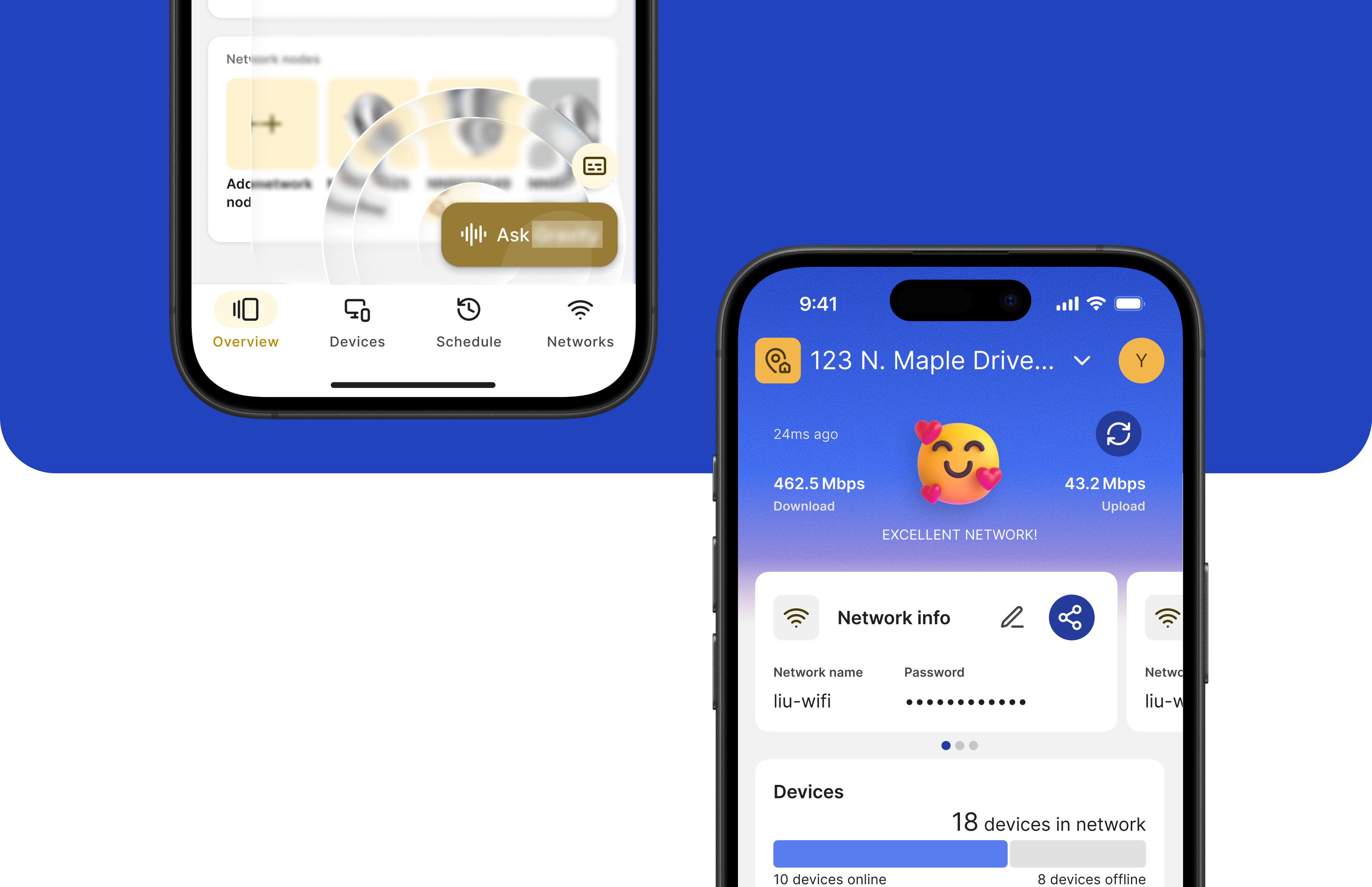

• Trust Ledger: An ambient stream that logs invisible AI actions as "Proof of Work," allowing retrospective auditing.

• Human-in-the-Loop: A seamless fallback flow where the Agent asks for human help when a situation is ambiguous, ensuring user control.

Impact

The Righ Ecosystem was acquired by Plume Design. My Agentic Interaction Framework became the company standard, showing that an "invisible" AI agent could still build high user trust and reduce support costs.

The "Agency-Control Paradox" in Autonomous Systems

I don't want to be bothered for every little thing, but I also want to know my Cleo (a company’s network hardware) is actually working. And if something does break, I need to know why, now.

A "Triaged Interaction" Framework for Automation

My research indicated that a binary "On/Off" interaction model fails in autonomous systems. Treating a "Network Optimization" (Low Stakes) the same way as a "Security Threat" (High Stakes) creates cognitive dissonance.

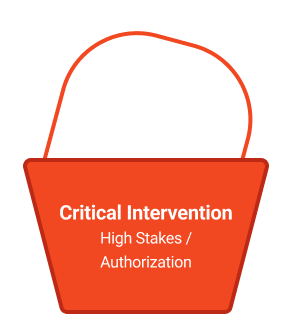

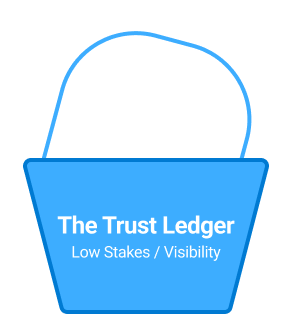

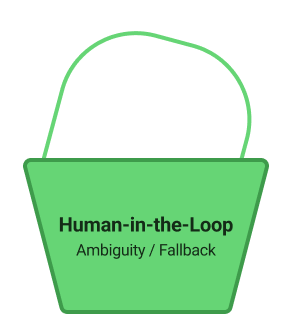

My key contribution was to shift from "Event-Based Design" to "Stake-Based Design." I created the "Triaged Interaction" framework by categorizing all autonomous actions into three distinct "Trust Buckets" based on their urgency and user impact.

This framework provided the complete architectural solution. It let me design three distinct interaction patterns that together resolve the paradox: Critical Interventions (High Stakes), Trust Ledgers (Low Stakes), and Human-in-the-Loop Escalations (Failure or Proactive States).

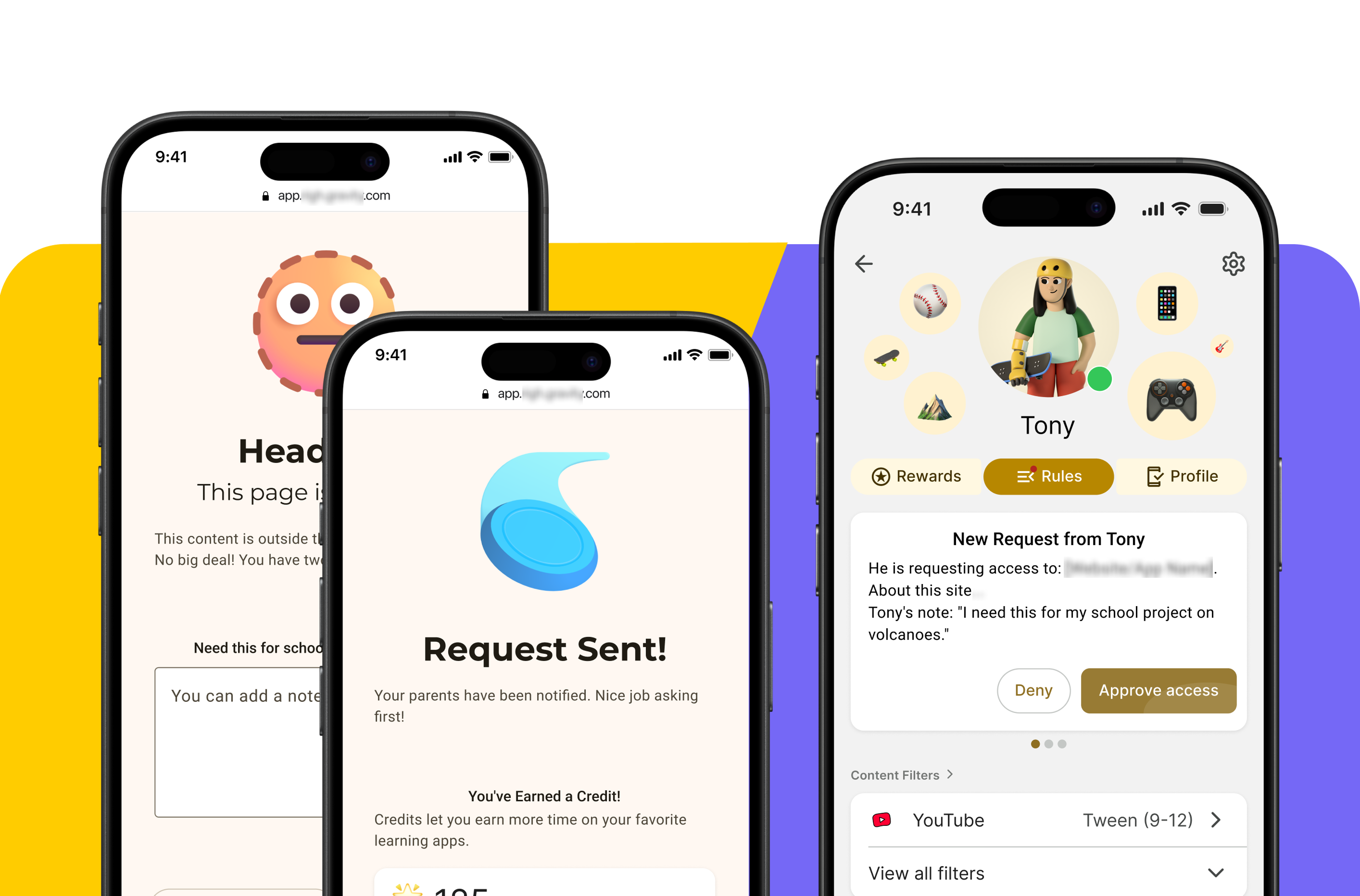

👀 What’s in it? High-risk events requiring human judgment (e.g., Security breaches, System outages).

📢 User’s words: "Stop and ask for my permission before acting."

👀 What’s in it? Invisible autonomous actions (e.g., "Latency optimized", "Routine maintenance").

📢 User’s words: "Don't interrupt me, but leave a record so I can verify your work."

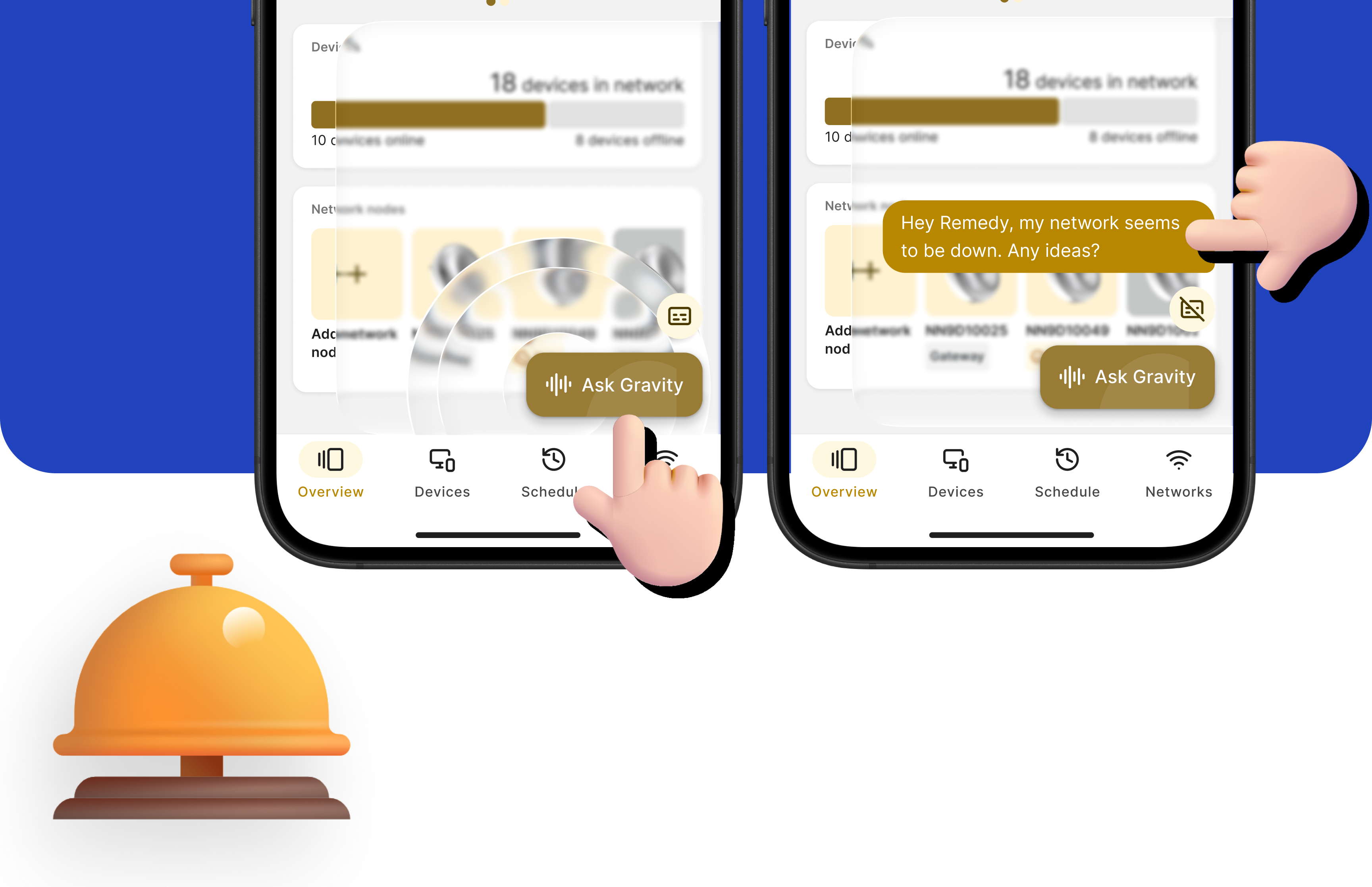

👀 What’s in it? Edge cases where the AI has low confidence or fails to resolve the issue.

📢 User’s words: "The AI is not working; I need to take back manual control." or "I want to set up a prioritized task."
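The three buckets above can be read as a small routing rule. The sketch below is only an illustration of that idea, not the shipped logic: the `AgentAction` fields, the `CONFIDENCE_FLOOR` value, and the order of the checks are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Bucket(Enum):
    CRITICAL_INTERVENTION = "critical_intervention"  # high stakes: ask first
    TRUST_LEDGER = "trust_ledger"                    # low stakes: log silently
    HUMAN_IN_THE_LOOP = "human_in_the_loop"          # low confidence or failure


@dataclass
class AgentAction:
    name: str
    high_stakes: bool      # hypothetical flag, e.g. security breach or outage
    confidence: float      # hypothetical 0.0-1.0 score reported by the agent
    resolved: bool = True  # False if the agent tried and could not fix it


CONFIDENCE_FLOOR = 0.7  # illustrative threshold, not taken from the project


def triage(action: AgentAction) -> Bucket:
    """Route an autonomous action to one of the three trust buckets."""
    # Assumption: stakes are checked first, so a high-stakes event always
    # asks for permission regardless of how confident the agent is.
    if action.high_stakes:
        return Bucket.CRITICAL_INTERVENTION
    if not action.resolved or action.confidence < CONFIDENCE_FLOOR:
        return Bucket.HUMAN_IN_THE_LOOP
    return Bucket.TRUST_LEDGER
```

For example, `triage(AgentAction("Latency optimized", high_stakes=False, confidence=0.95))` lands in the Trust Ledger bucket, while the same action with `confidence=0.4` is escalated to the user.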

Defining the "Human-Agent Trust Protocol"

To solve the "Agency-Control Paradox," I first had to define the rules of engagement. I analyzed three common automation patterns and found them flawed.

The first is the "Too Loud" path. While transparent, exposing every algorithmic decision creates Cognitive Overload. It buries critical signals in a mountain of technical noise.

The second is the "Too Intrusive" path. Treating every event as a critical alert erodes trust. It disrupts user focus and leads to "Alert Fatigue," training users to ignore the agent entirely.

The third is the "Too Invisible" path. While ideally autonomous, it fails in enterprise contexts. Without Observability, users cannot verify ROI or audit performance, leading to a complete lack of confidence.

A Framework for "Trust Calibration"

My final solution was a "Triaged Interaction" framework that synthesized the three models into a cohesive system, adjusting visibility based on the stakes.

a. The "Trust Ledger" (Visibility Layer)

It replaces the "Full Report" with a passive audit log. It records invisible autonomous actions (like "Optimized Latency") as "Proof of Work," allowing users to verify performance retrospectively without cognitive noise.

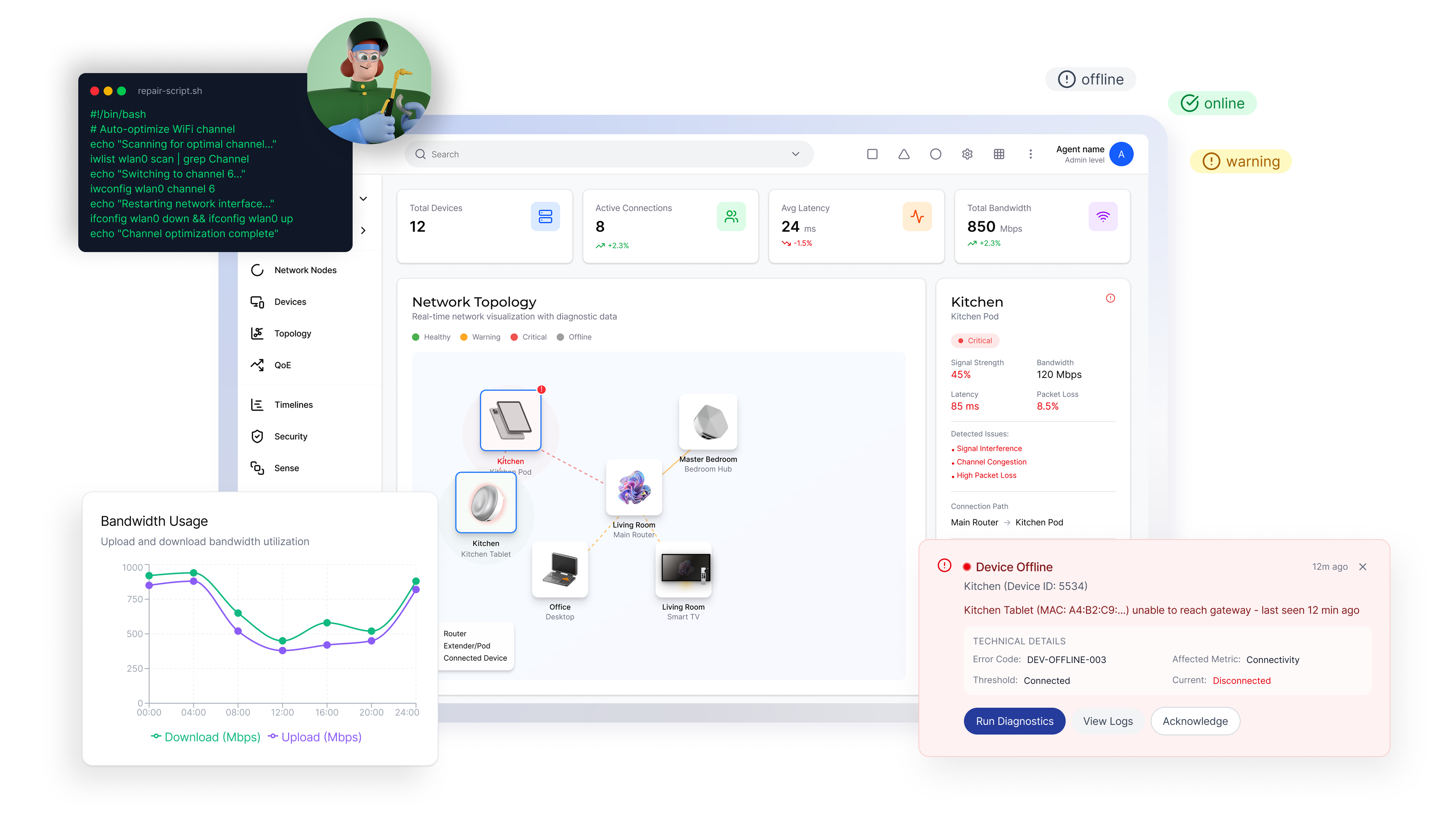
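One way to picture the Trust Ledger is as an append-only log that the user reads only when they choose to. The sketch below assumes that shape; `TrustLedger`, `LedgerEntry`, and their fields are hypothetical names for illustration and do not describe the actual product's data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class LedgerEntry:
    timestamp: datetime
    action: str   # e.g. "Optimized Latency"
    outcome: str  # short, human-readable result


@dataclass
class TrustLedger:
    _entries: list = field(default_factory=list)

    def record(self, action: str, outcome: str,
               when: Optional[datetime] = None) -> LedgerEntry:
        """Append a silent 'Proof of Work' entry; nothing is pushed to the user."""
        entry = LedgerEntry(when or datetime.now(timezone.utc), action, outcome)
        self._entries.append(entry)
        return entry

    def audit(self, since: datetime) -> list:
        """Return entries at or after `since`, for retrospective review."""
        return [e for e in self._entries if e.timestamp >= since]
```

The point of the design is that `record` never interrupts anyone, while `audit` lets the user verify the agent's work on their own schedule.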

b. Critical Interventions (Permission Layer)

It replaces standard "Push Notifications" with high-friction alerts. The system is only "loud" for high-stakes events (like Security), shifting the paradigm from "Marketing" to "Human Authorization."

c. Human-in-the-Loop Escalation (Safety Layer)

It replaces the "Graceful Handoff" with a safety protocol. When the Agent encounters ambiguity, it pauses and seamlessly hands context to the user, ensuring the system is always Steerable and never a "Black Box."
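The pause-and-hand-off behavior can be sketched as a function that either lets the agent proceed or returns a context bundle for the user. This is a minimal sketch under stated assumptions: the `Handoff` fields, the function name, and the `floor` default of 0.7 are all illustrative, not details from the project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Handoff:
    issue: str              # what the agent was trying to resolve
    steps_tried: list[str]  # context carried over so the user need not start from zero
    reason: str             # why the agent paused


def escalate_if_ambiguous(issue: str, steps_tried: list[str],
                          confidence: float,
                          floor: float = 0.7) -> Optional[Handoff]:
    """Return None if the agent may proceed, else a Handoff for the user."""
    if confidence >= floor:
        return None
    return Handoff(
        issue=issue,
        steps_tried=list(steps_tried),  # copy, so later agent work cannot alter it
        reason=f"confidence {confidence:.2f} is below {floor:.2f}",
    )
```

Returning the attempted steps together with the reason is what keeps the escalation from feeling like a bare error message: the user sees what was tried and why the agent stopped.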

A Scalable "Trust Protocol" for the Ecosystem

The impact of this project was strategic and validated by the market. The "Triaged Interaction" framework I created as lead designer was not only adopted by leadership as the official standard for all Righ autonomous products but also played a key role in the platform's value proposition during its acquisition by Plume Design.

1. Standardized Interaction Model

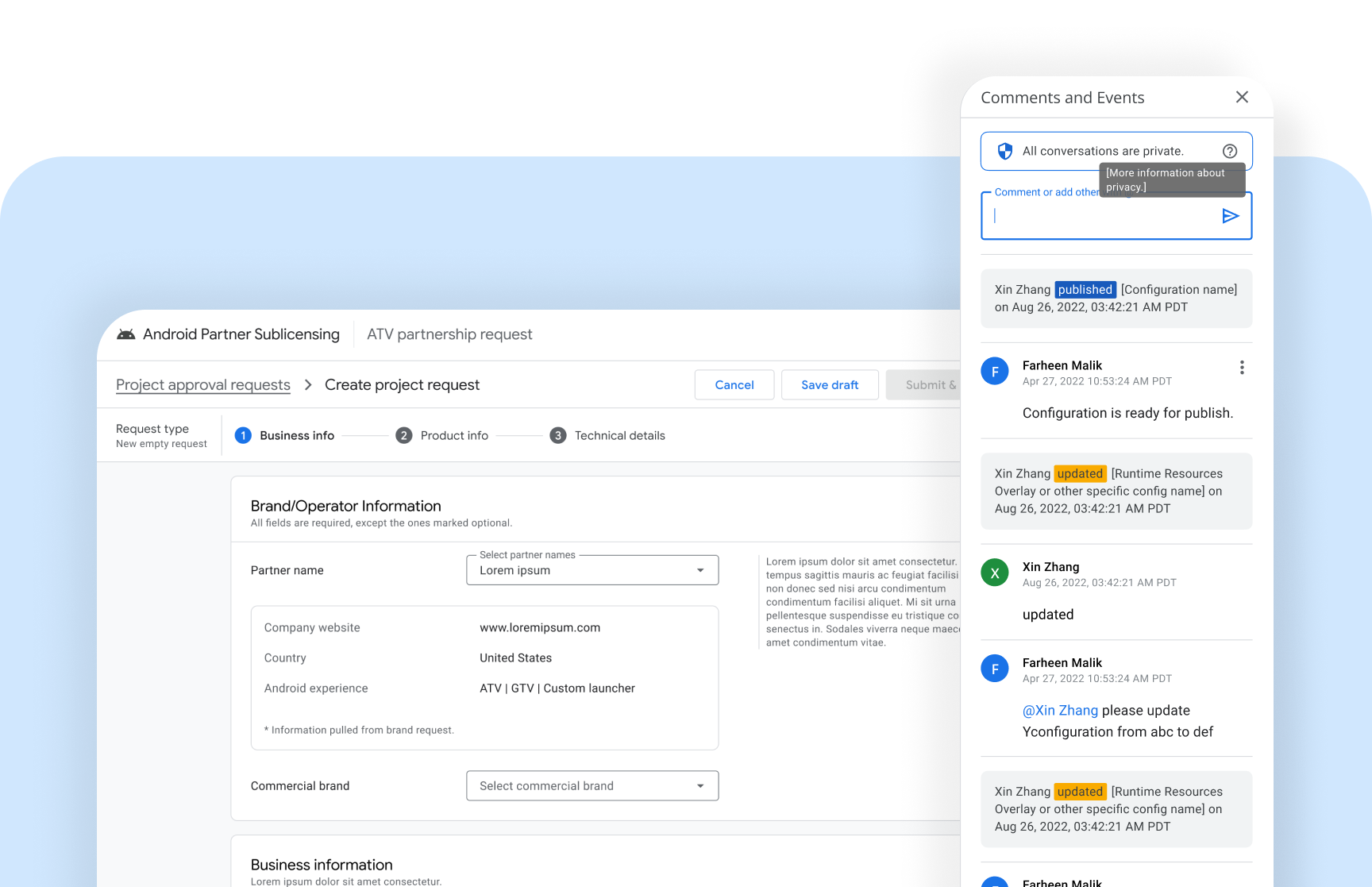

My 3-part framework became the foundational Human-Agent Interaction Guideline for the company. It established a unified language for how all future AI agents would negotiate trust and permission with users across the ecosystem.

2. Solved the "Agency-Control Paradox"

This design provided a repeatable pattern to prove the AI's ROI (via the "Trust Ledger") without causing cognitive overload. It successfully balanced the efficiency of automation with the user's need for control and observability.

My Key Learnings

1. Trust Requires Observability

My biggest learning for B2B automation is that a "Black Box" is a business failure. Stakeholders cannot trust what they cannot see. I learned that "Proof of Work" (via the Trust Ledger) is not just a feature—it is the psychological foundation that allows users to "let go" and delegate tasks to an Agent.

2. Calibration over Consistency

I realized that trust is a spectrum, not a binary switch. We are not just designing one "happy path"; we are designing a "Calibration System" that dynamically shifts the Agent's behavior (from Invisible to Loud) based on the risk level of the action.

From Notification to Delegation

This framework set the stage for true Agentic workflows. Once trust was established via the "Ledger," we could move to V2: "Goal-Based Delegation." Instead of just reporting status, the interface evolved to allow users to state high-level intent (e.g., "Optimize for a party tonight"), trusting the Agent to execute the complex sub-tasks autonomously.