Privacy-First AI Architecture

Defining a hybrid 'On-Device vs. Cloud' strategy to solve ethical constraints in family safety tools.

Problem

Traditional "parental control" apps are adversarial. They break family trust and fail to adapt to a child's maturity, forcing kids to find workarounds.

My Role

As the sole product designer, I led the end-to-end process, from user research and defining ethical design principles to the final UX/UI.

Solution

Instead of relying on a power-hungry cloud AI, I designed a privacy-first hybrid solution that works within the product's core constraint: on-device AI. My model offers an "appealing exit" (redirection) while still respecting user intent through a "permission" flow.

Impact

My research and "Proof of View" (POV) prototype, built around this privacy-first model, successfully secured leadership buy-in. The design was adopted as the official product strategy and entered the development pipeline.

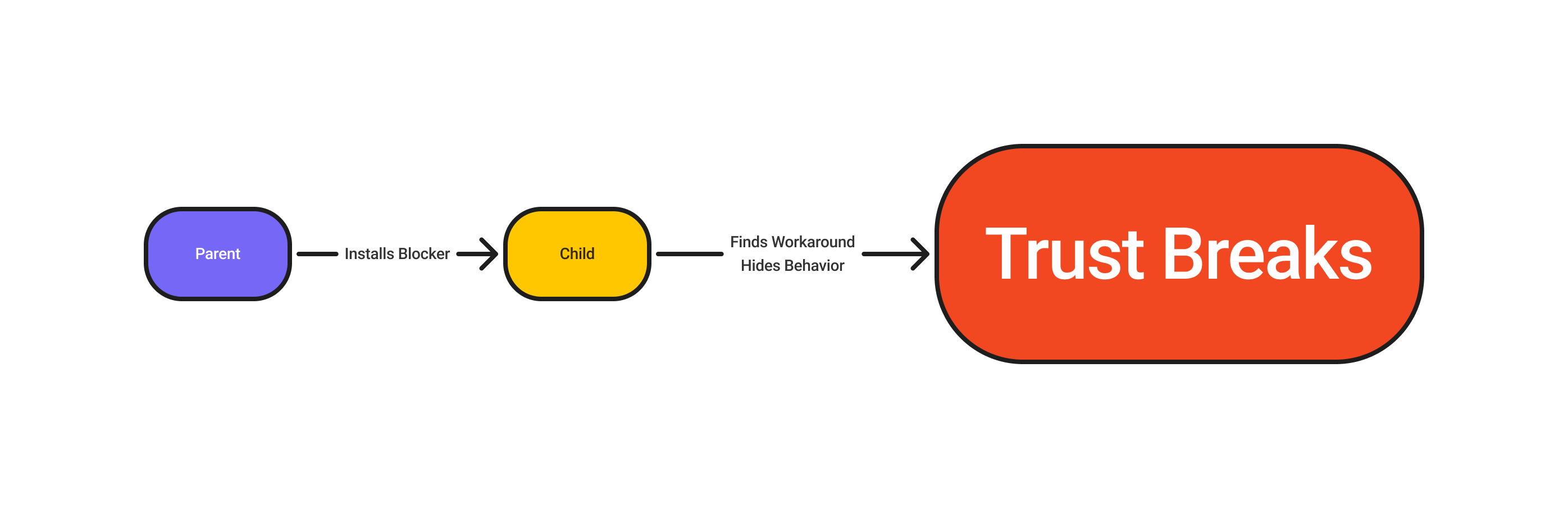

"Control" Backfires and Breaks Trust

My initial research confirmed this: today's "parental control" market is built on an adversarial model. These tools are binary and blunt, treating a curious 16-year-old the same as a 10-year-old. This one-size-fits-all approach not only fails to adapt to a child's maturity, but it actively damages family trust and leads to high customer churn.

This led to a critical question: How might we build a tool that increases family trust instead of destroying it?

"My son always finds a way... one time I found out he saved his allowance and bought a cheap Android phone just to get around the rules. I only discovered it when I saw an 'unknown device' in my network app."

The "Blocker" Model Is a Failed Arms Race

To understand the 'why' behind this broken trust, I conducted 1:1 interviews with two key user groups: parents and their teens (aged 13-17). My research indicated that simply blocking content backfires. It triggers defiance (the 'forbidden fruit' effect) and motivates teens to find workarounds, hiding their behavior even more. My findings showed that teens, as resourceful digital natives, were nearly 100% successful at bypassing simple blockers.

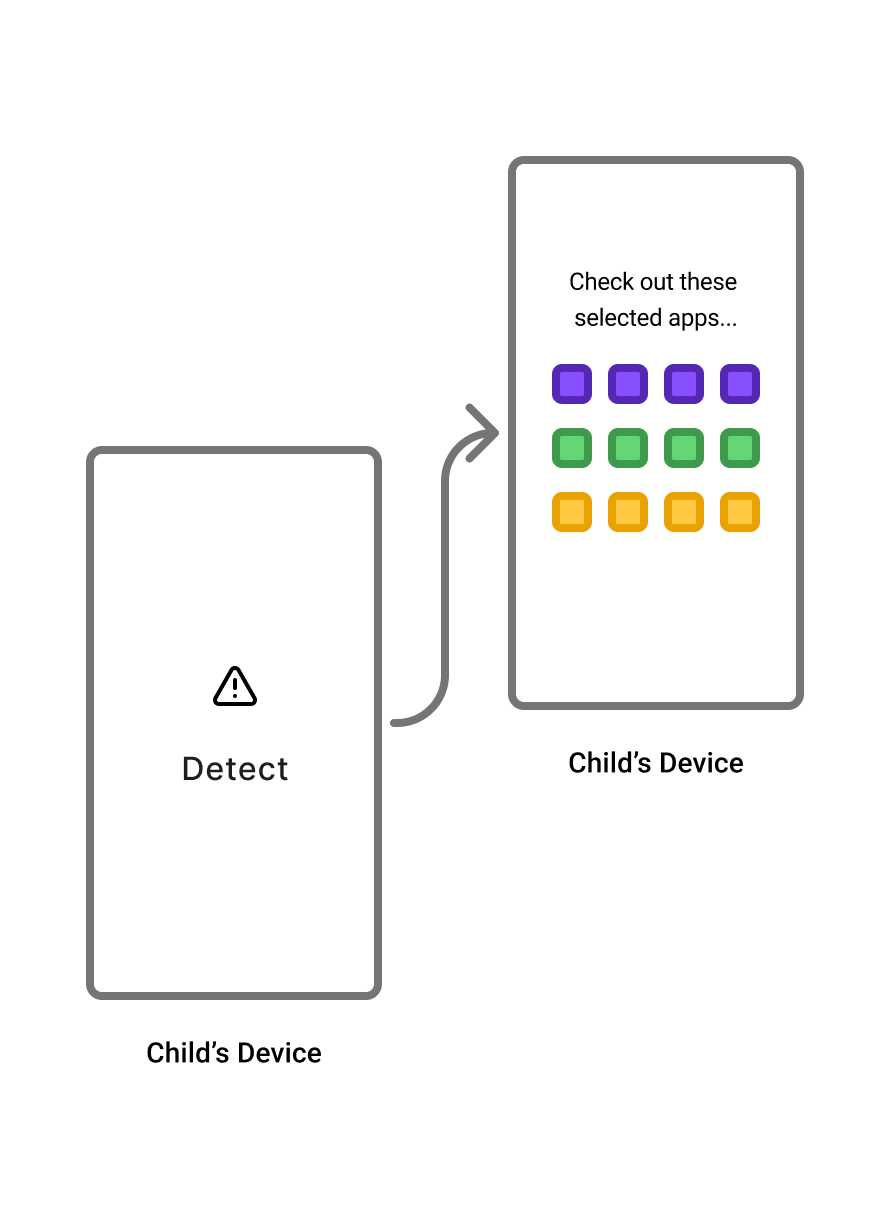

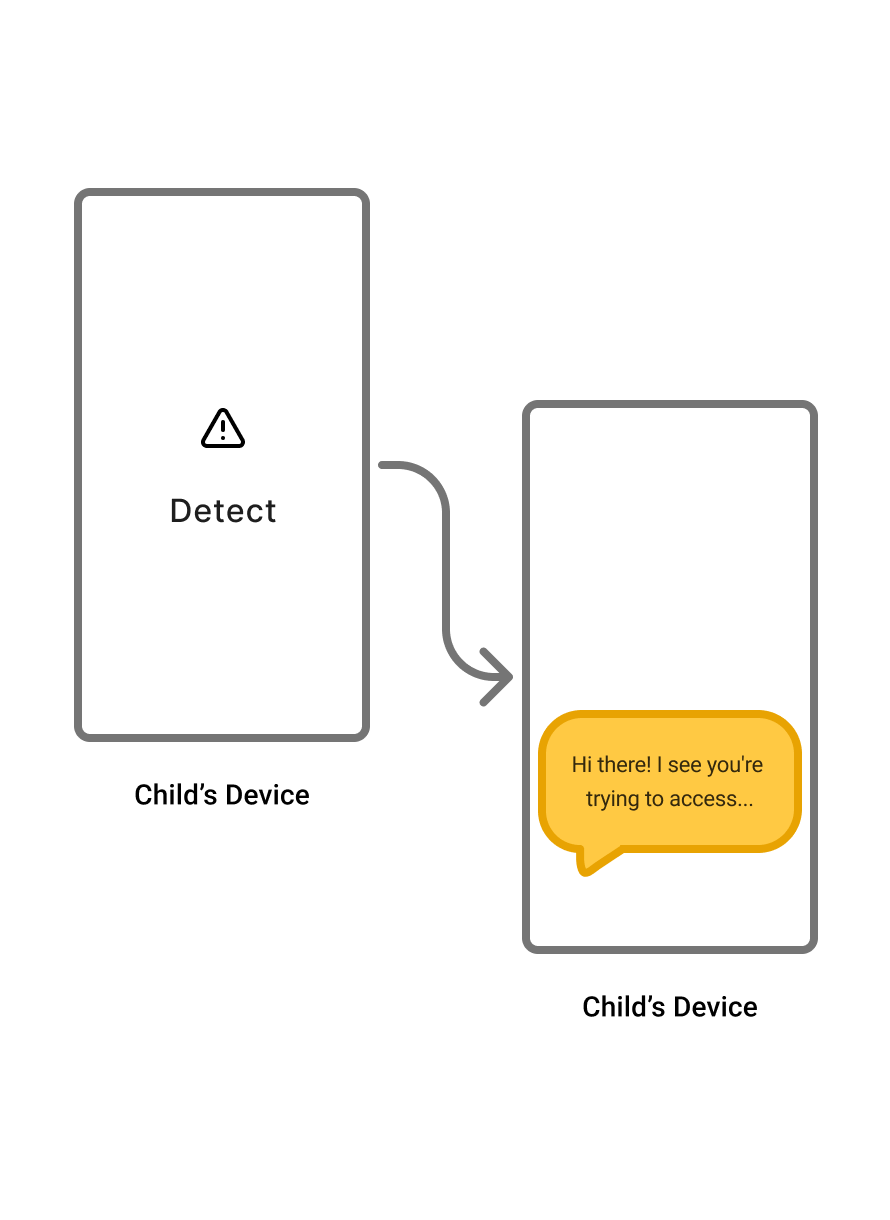

The real opportunity wasn't to build a better wall, but a better door. Kids were open to guidance, but only if it respected their autonomy and wasn't a dead end. They needed an appealing 'off-ramp' to safer content.

This insight became our guiding principle: 'Guide, Don't Police.' This is a problem of context, timing, and tone. The challenge is to turn a general-purpose AI into an adaptive one.

From Insight to Principles: Setting the Rules for the AI

Before drawing a single screen, I translated my 'Guide, Don't Police' insight into three core design principles. These rules became the strategic compass for the project and aligned all stakeholders on the vision.

Guide, Don't Police

Always provide a path forward (an "appealing exit"), never a dead end.

Respect Autonomy

The AI shall adapt rules based on behavior and maturity, not just a fixed age.

Enable Conversation

Give parents insights for "teachable moments," not just punitive alerts.

Balancing Privacy (On-Device) vs. Power (Cloud AI)

Option 1: Permission Request

• Pro: Opens a dialogue (Principle #3).

• Con: Still a "dead end" for the user's immediate task.

Option 2: Redirection

• Pro: Solves the "Forbidden Fruit" effect (Principle #1).

• Con: Ignores the user's original intent.

Option 3: Cloud AI

• Pro: The most powerful, conversational solution. It adapts to nearly all situations.

• Con: Violates our product's core promise of on-device privacy, because it would require sending sensitive family browsing data to the cloud.

An Elegant, Privacy-First Hybrid

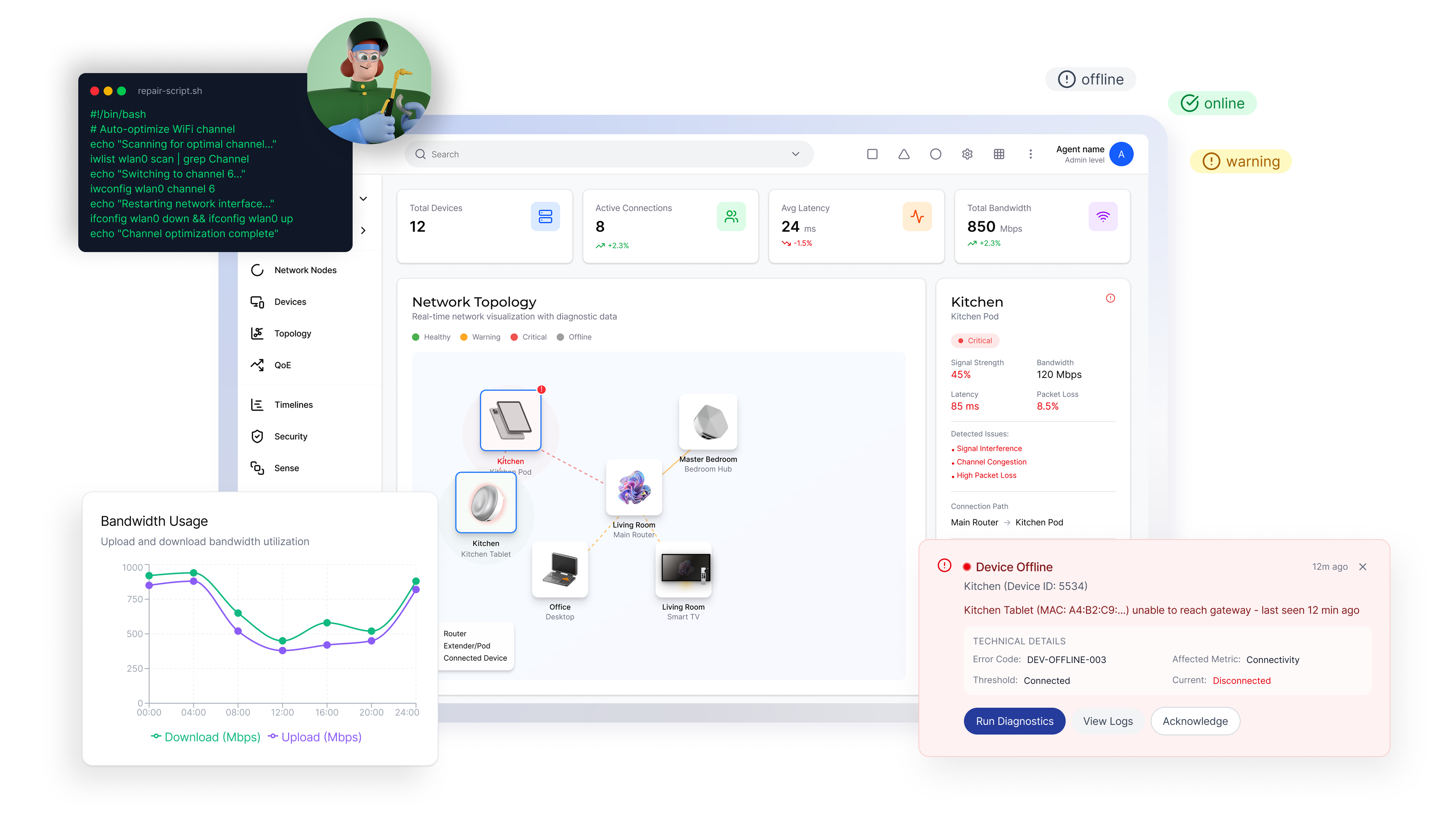

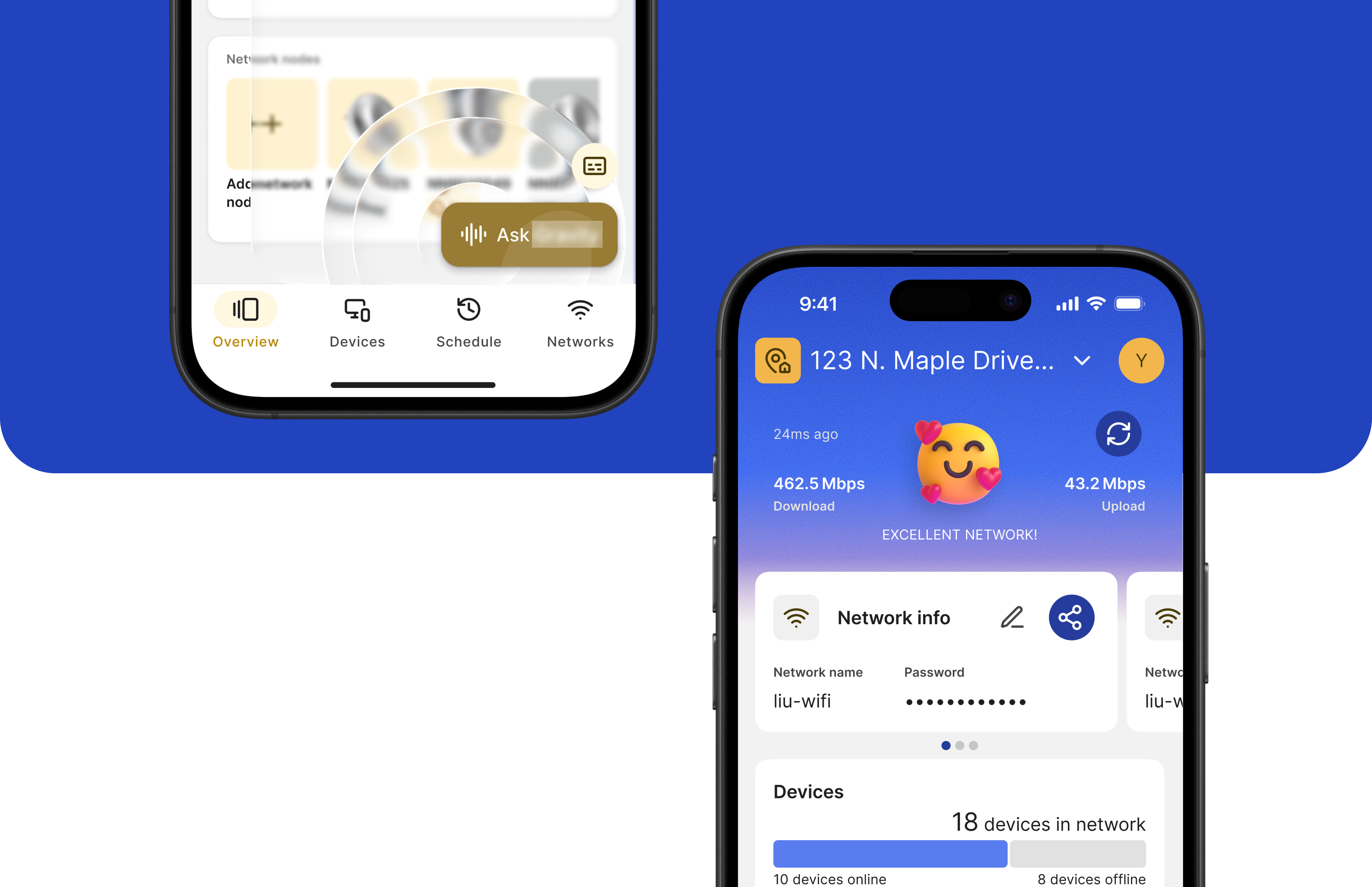

My final design is a lightweight hybrid model that combines the user value of both the redirection and permission options without compromising privacy. It provides the 'appealing exit' for casual browsing and the 'permission' flow for legitimate needs.

Crucially, this entire experience was designed to be lightweight enough to run on our on-device AI. It avoids the ethical and financial costs of a cloud-based model, reinforcing our brand's promise of data safety. This privacy-first POV was adopted by leadership and moved into the development pipeline.

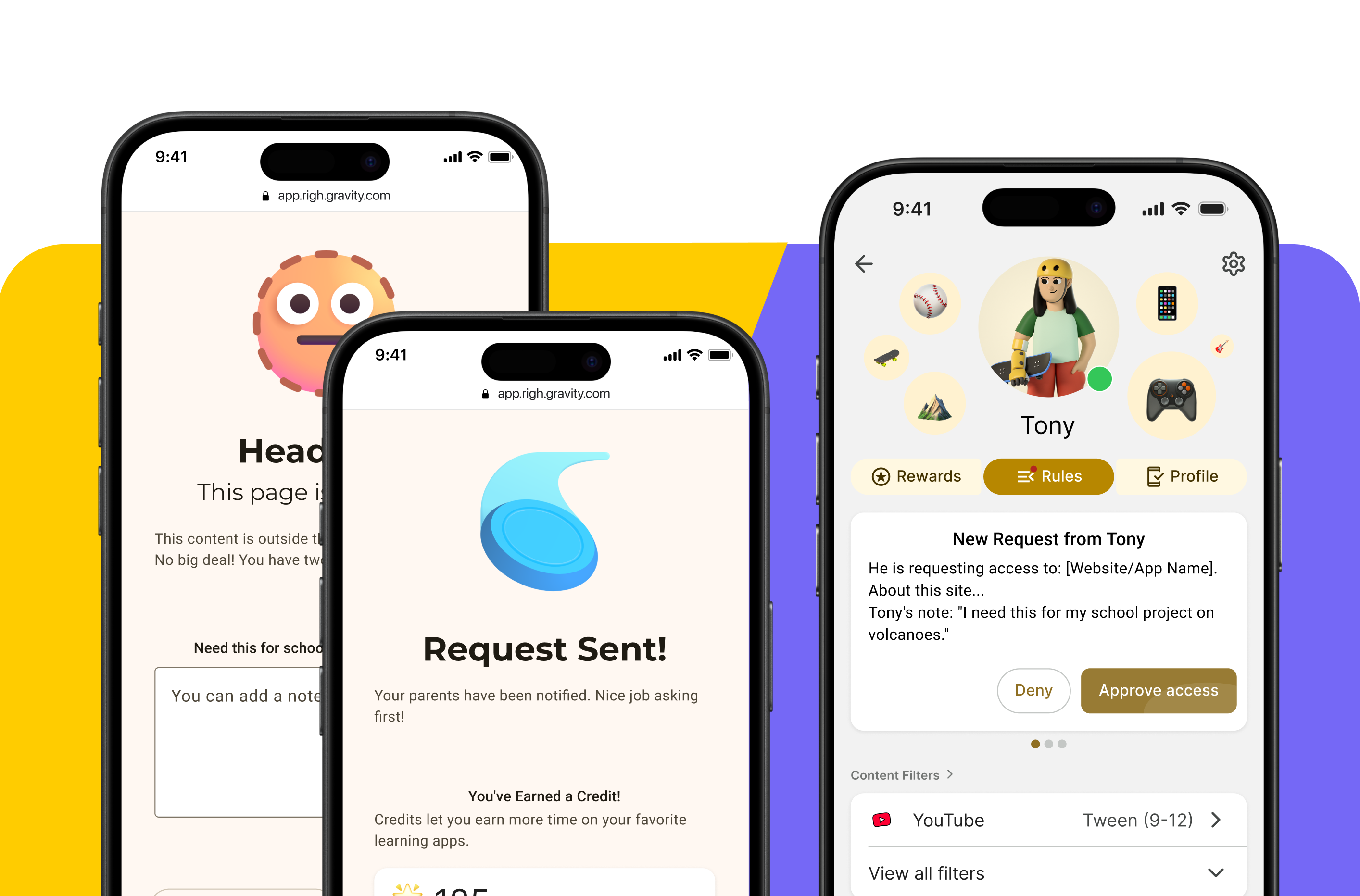

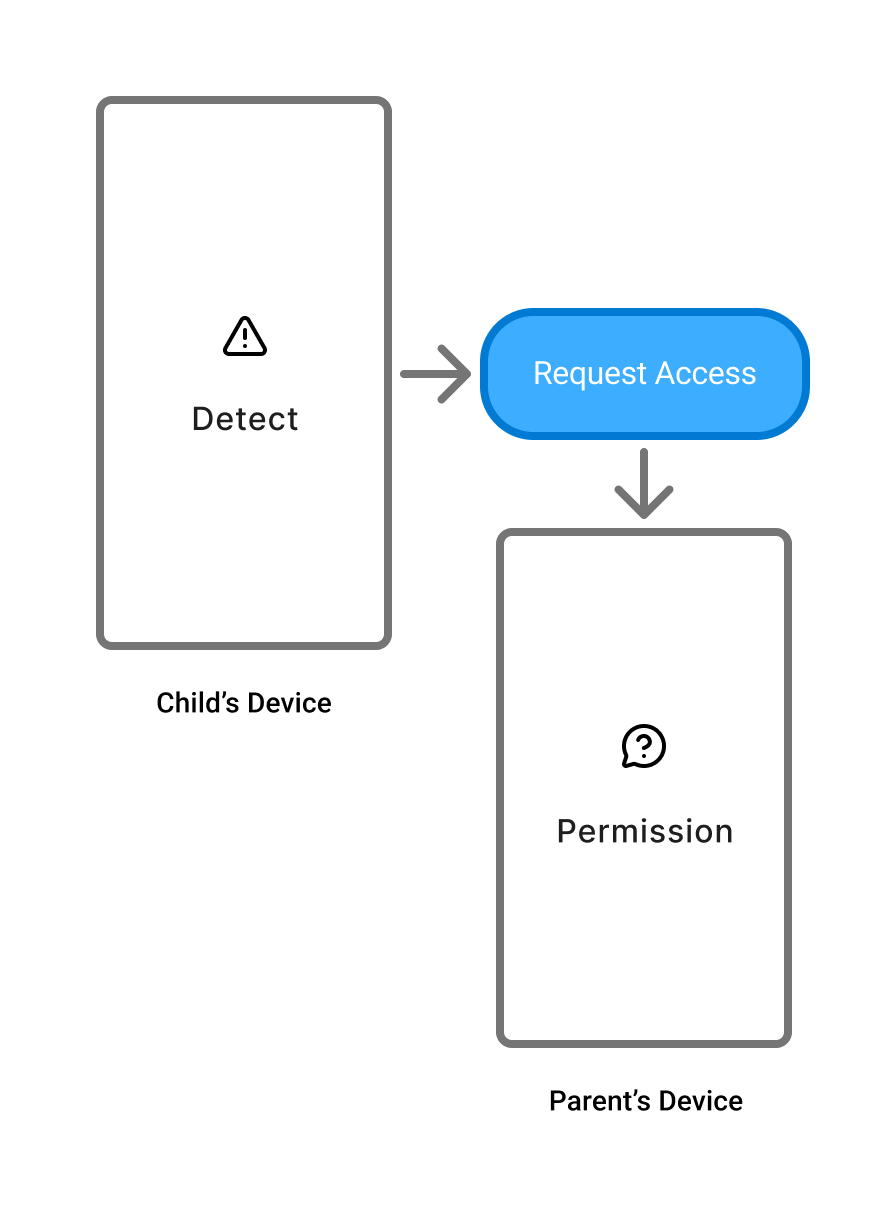

A "Dual-Path" Solution for Child and Parent

The Child's Side (Tony's Flow)

When a child encounters a restricted page, my hybrid design offers two 'calm' paths instead of an adversarial block: a 'Permission' flow and a 'Redirection' flow. This respects the child's autonomy and provides an 'emotional exit.'

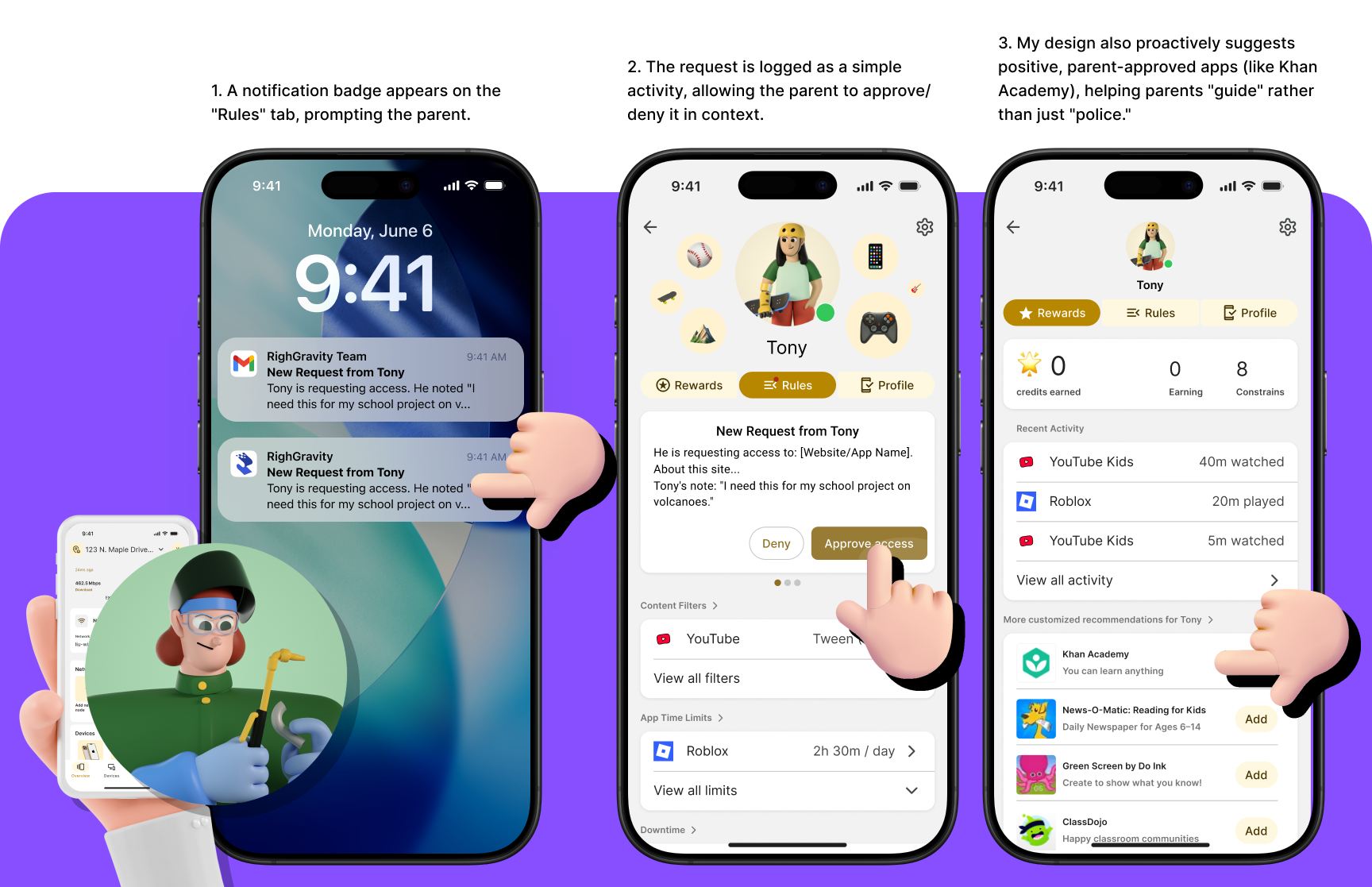

The Parent's Side (Lisa's Flow)

The child's request appears as a simple, non-urgent task on the parent's app. This turns a potential conflict (a 'block') into a 'conversation starter,' fulfilling my 'Enable Conversation' principle.

Outcomes: From "Proof of View" to Official Strategy

As the sole designer, my final 'Proof of View' successfully demonstrated that I could solve the complex ethical problem of child safety without compromising our core user promise of on-device privacy. The design was adopted by leadership and moved into development.

Leadership Buy-In

Impact: My privacy-first hybrid model was presented to leadership and adopted as the official product strategy.

Greenlit for Development

Impact: The POV was successful, and the project was greenlit for the development pipeline.

My Key Learnings

Embrace the Technical Constraint

The on-device AI constraint seemed like a weakness at first, but it became my greatest strength. It forced me to design a more elegant, ethical, and privacy-first solution than a 'do-anything' cloud AI would have.

AI Ethics in Delicate Relationships

Designing for two users (parent and child) means a 'win' isn't about one side being happy, but about building a bridge between them.